Counterhate Arguments Extending EMNLP '23

Reproducing and extending the EMNLP '23 study on finding authentic counterhate arguments against online hate speech.

---

Source code is available in the GitHub repository: https://github.com/swetapati22/Counterhate_Arguments

GitHub handle: swetapati22

Project: Re-implementing Authentic Counterhate Arguments

---


Overview

In this project, we replicate and extend the EMNLP 2023 paper “Finding Authentic Counterhate Arguments: A Case Study with Public Figures” by Albanyan, Hassan, and Blanco. This study focuses on identifying authentic counterhate arguments that effectively counter online hate speech targeted at specific individuals.

This work is part of the “Reproducibility Challenges in Research Papers” initiative and has been carefully documented in our Reproducibility Study Report by Sweta Pati and Swabhi Papneja.

For a fuller overview of our work, please see our accompanying presentation.

Project Objective

Objective: To identify authentic counterhate arguments that directly refute the claims made in hateful tweets targeting specific individuals. Counterhate responses should be logical, fact-based, and effective at deconstructing hateful rhetoric.

What is Hate Speech?

“A hateful speech, as per Twitter guidelines, includes any implicit or explicit tweet that attacks an individual’s gender, religion, race, ideology, or social class.”

What is Counterhate?

“Counterhate is a direct response that refutes hate speech. An authentic counterhate argument is a fact-based response that includes logical reasoning, testimonials, or statistical evidence.”

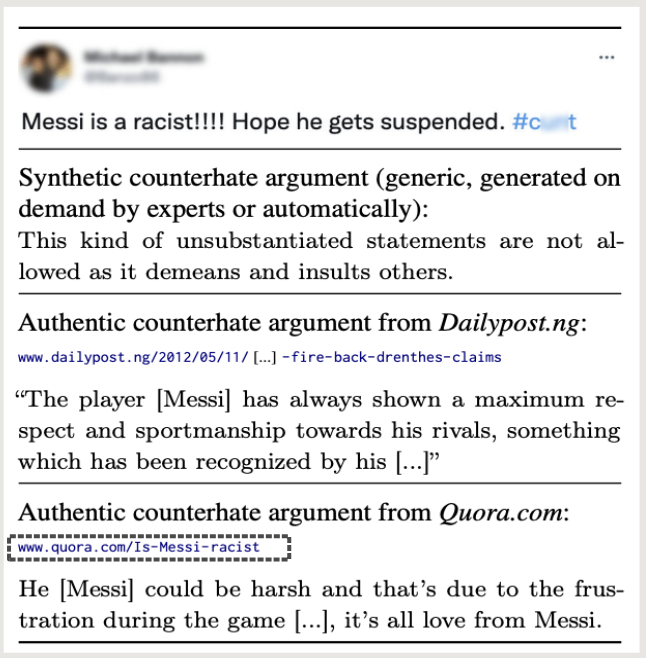

The figure above shows an example of a hateful tweet (top) and three replies containing counterhate. The paper's goal is to find authentic counterhate arguments that address the hateful claims in the tweet at hand.

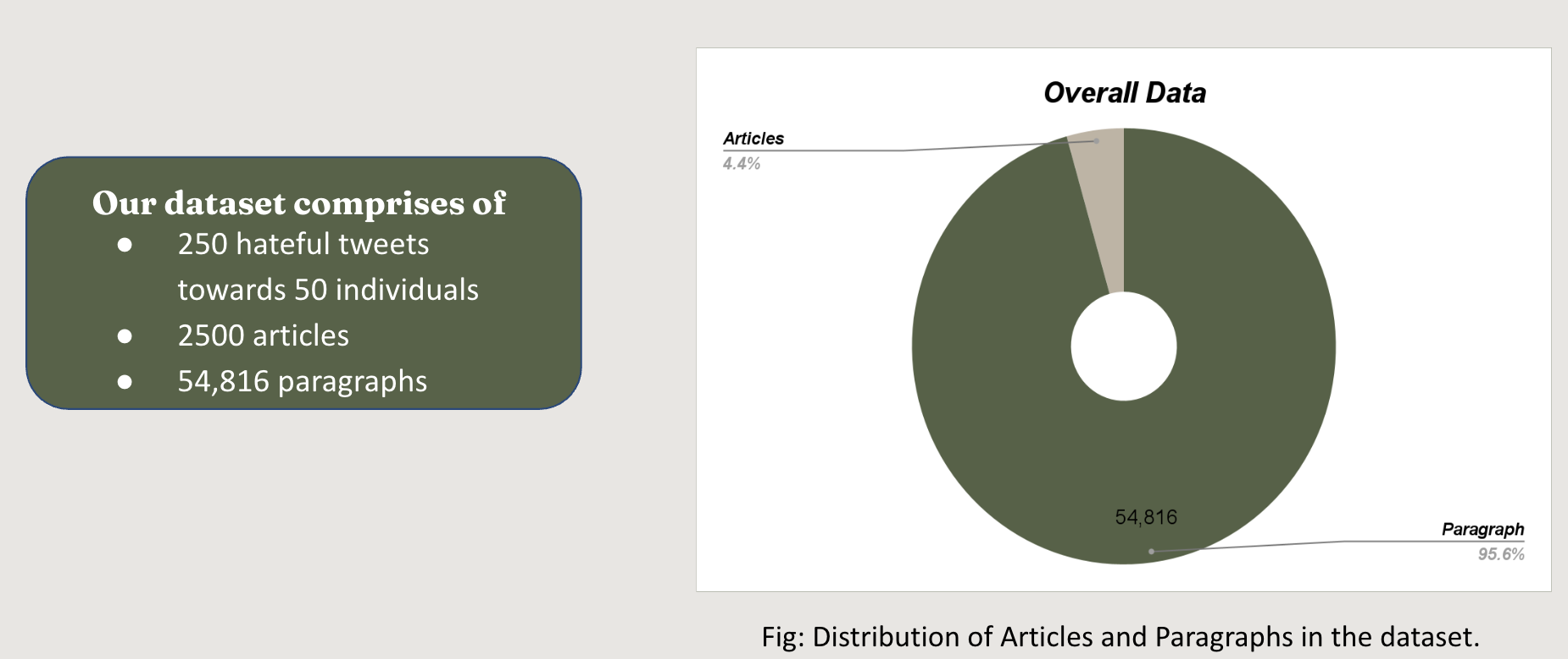

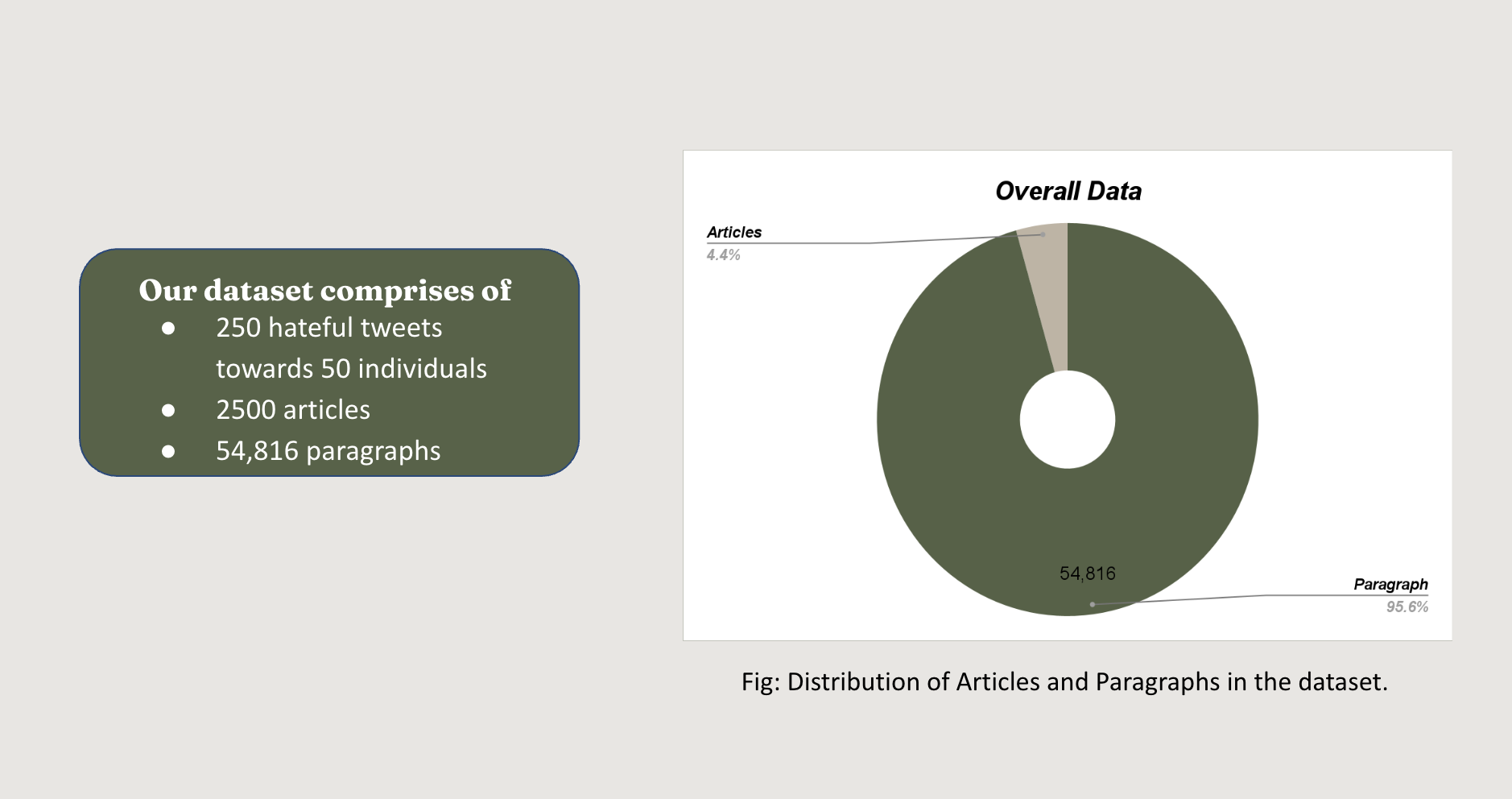

Dataset Overview

Our dataset is derived from hateful tweets directed at 50 individuals. It includes:

- 250 hateful tweets

- 2,500 articles

- 54,816 paragraphs (collected as supporting evidence)
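As a quick sanity check on scale, the counts above imply the averages below. These are plain ratios only. They do not assume that every tweet has the same number of articles or that every article has the same number of paragraphs.

```python
# Dataset sizes as listed in the Dataset Overview.
NUM_TWEETS = 250
NUM_ARTICLES = 2500
NUM_PARAGRAPHS = 54_816

# Simple averages over the whole dataset (not per-item guarantees).
articles_per_tweet = NUM_ARTICLES / NUM_TWEETS
paragraphs_per_article = NUM_PARAGRAPHS / NUM_ARTICLES
paragraphs_per_tweet = NUM_PARAGRAPHS / NUM_TWEETS

print(f"articles per tweet:     {articles_per_tweet:.1f}")      # 10.0
print(f"paragraphs per article: {paragraphs_per_article:.2f}")  # 21.93
print(f"paragraphs per tweet:   {paragraphs_per_tweet:.2f}")    # 219.26
```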

Approach & Methodology

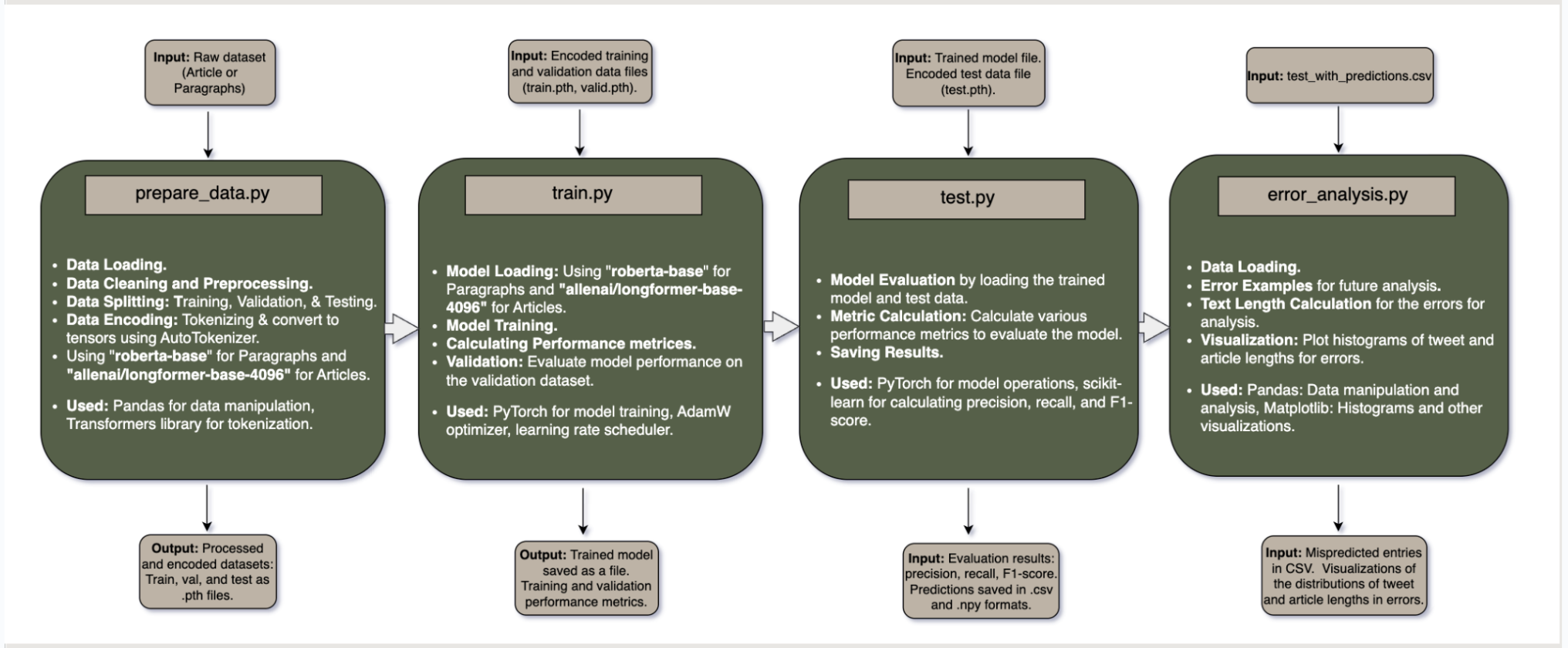

To ensure a structured analysis, we break down the workflow into four key stages:

Data Preparation

- Loads the raw tweets, paragraphs, and articles.

- Cleans the text and tokenizes it with the RoBERTa tokenizer (for paragraphs) and the Longformer tokenizer (for articles).

- Splits the data into training, validation, and test sets.
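The stages above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code in `prepare_data.py`. The sketch relies on Hugging Face `transformers` tokenizers. The `roberta-base` and `allenai/longformer-base-4096` checkpoints, the maximum lengths, the 80/10/10 ratios, the `tweet_id` field, and the helper names (`load_tokenizer`, `encode_pair`, `split_by_tweet`) are all hypothetical. The split keeps every candidate for a given tweet in the same set to avoid leakage. That is an assumed design choice, not a confirmed detail of this repository.

```python
import random
from collections import defaultdict

# Hypothetical mapping from --level to a checkpoint and maximum input length.
LEVEL_CONFIG = {
    "paragraph": {"checkpoint": "roberta-base", "max_length": 512},
    "article": {"checkpoint": "allenai/longformer-base-4096", "max_length": 4096},
}


def load_tokenizer(level):
    """Load the tokenizer for a level (requires `transformers`; downloads on first use)."""
    from transformers import AutoTokenizer  # imported lazily

    return AutoTokenizer.from_pretrained(LEVEL_CONFIG[level]["checkpoint"])


def encode_pair(tokenizer, tweet, candidate, level):
    """Encode a (hateful tweet, candidate paragraph/article) pair as one input."""
    return tokenizer(
        tweet,
        candidate,
        truncation="only_second",  # keep the tweet whole, truncate the long candidate
        max_length=LEVEL_CONFIG[level]["max_length"],
    )


def split_by_tweet(examples, ratios=(0.8, 0.1, 0.1), seed=42):
    """Split examples into train/val/test so all candidates of a tweet share a split."""
    groups = defaultdict(list)
    for ex in examples:
        groups[ex["tweet_id"]].append(ex)
    tweet_ids = sorted(groups)
    random.Random(seed).shuffle(tweet_ids)  # deterministic shuffle for reproducibility
    n_train = int(ratios[0] * len(tweet_ids))
    n_val = int(ratios[1] * len(tweet_ids))
    parts = (
        tweet_ids[:n_train],
        tweet_ids[n_train:n_train + n_val],
        tweet_ids[n_train + n_val:],
    )
    return tuple([ex for tid in part for ex in groups[tid]] for part in parts)
```

Splitting by tweet rather than by row means the test set contains only unseen hateful tweets. This is usually a stricter and more honest evaluation when each tweet has many candidate paragraphs.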

Model Training

- Fine-tunes Transformer-based models on the dataset.

- Uses the AdamW optimizer with an adaptive learning-rate schedule.

- Trains using a classification loss function.
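The sketch below shows one way to wire up the optimizer and schedule. It assumes that "adaptive learning-rate scheduling" means linear warmup followed by linear decay, the same shape as `transformers.get_linear_schedule_with_warmup`. It also assumes the classification loss is cross-entropy. The learning rate, weight decay, warmup ratio, and helper names are hypothetical, not the repository's settings.

```python
def lr_multiplier(step, total_steps, warmup_steps):
    """Factor applied to the base learning rate at a given optimizer step.

    Rises linearly from 0 to 1 over `warmup_steps`, then decays linearly
    to 0 at `total_steps` (assumed schedule shape).
    """
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def build_optimizer_and_scheduler(model, total_steps, lr=2e-5,
                                  weight_decay=0.01, warmup_ratio=0.1):
    """Create AdamW plus a LambdaLR scheduler (requires PyTorch).

    The hyperparameter defaults are illustrative assumptions.
    """
    import torch  # imported lazily so lr_multiplier has no dependencies

    optimizer = torch.optim.AdamW(model.parameters(), lr=lr,
                                  weight_decay=weight_decay)
    warmup_steps = int(warmup_ratio * total_steps)
    scheduler = torch.optim.lr_scheduler.LambdaLR(
        optimizer,
        lr_lambda=lambda step: lr_multiplier(step, total_steps, warmup_steps),
    )
    return optimizer, scheduler

# One training step would look roughly like this (assuming a Hugging Face
# sequence-classification model whose output has a `.logits` attribute):
#   logits = model(**batch).logits
#   loss = torch.nn.functional.cross_entropy(logits, labels)
#   loss.backward(); optimizer.step(); scheduler.step(); optimizer.zero_grad()
```

Warmup keeps early updates small while the freshly initialized classification head is still noisy. The decay then lets fine-tuning settle.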

Model Evaluation

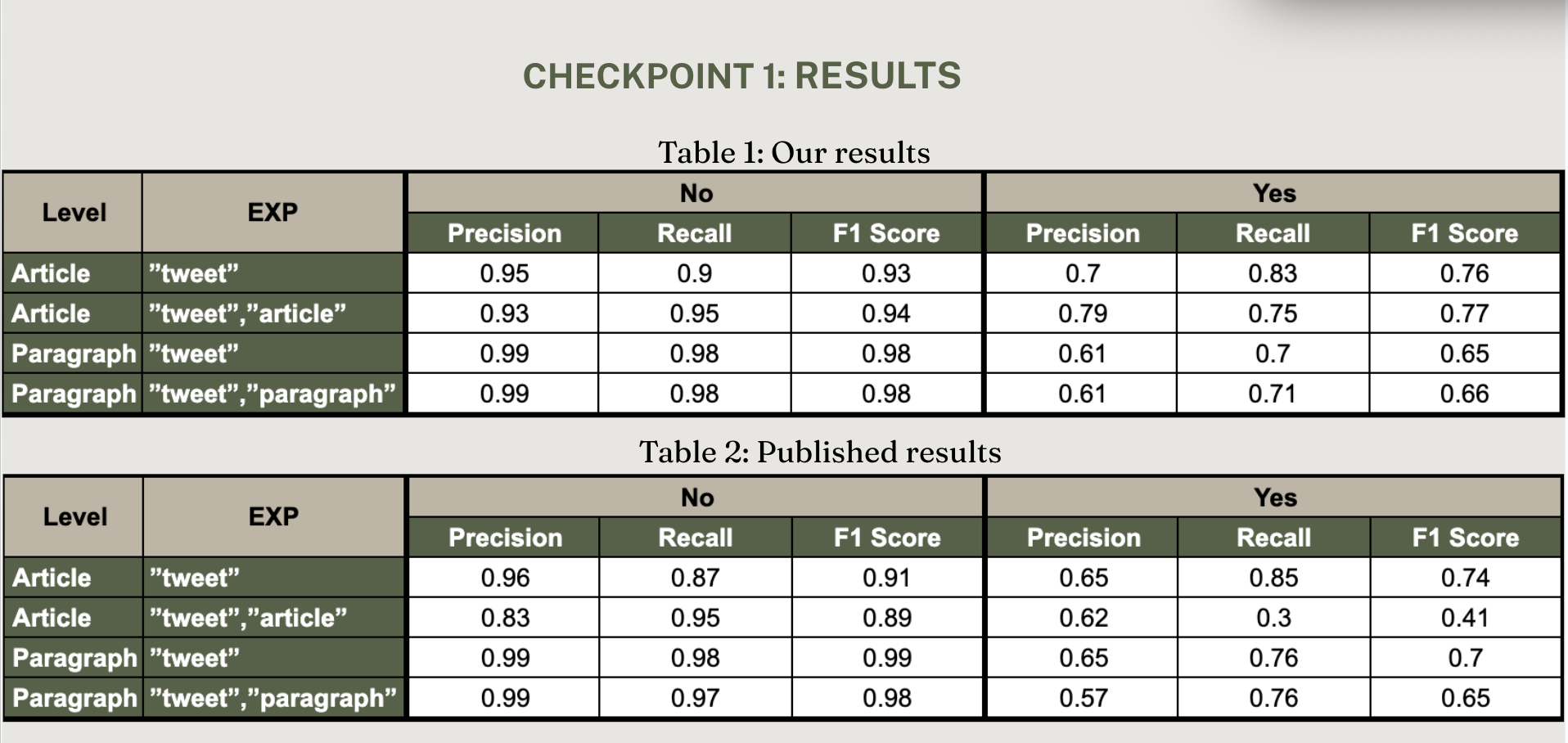

- Evaluates the model using precision, recall, and F1-score.

- Compares these scores against the results published in the original paper.
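For reference, the three metrics can be computed directly from gold and predicted labels, as in the sketch below. It assumes a binary task in which label `1` marks an authentic counterhate argument. That label encoding and the helper name are hypothetical. `sklearn.metrics.precision_recall_fscore_support` with `average="binary"` computes the same quantities.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


p, r, f = precision_recall_f1([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0])
print(round(p, 3), round(r, 3), round(f, 3))  # 0.667 0.667 0.667
```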

Error Analysis & Interpretability

- Identifies misclassified samples to analyze weaknesses.

- Generates histograms to visualize text characteristics.

- Uses insights from these errors to improve the model's generalizability.
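The first two error-analysis steps can be approximated in plain Python, as in the sketch below. It separates false positives from false negatives and prints a text-only length histogram as a stand-in for the plotted histograms. The helper names, the whitespace-token length measure, and the bin width are hypothetical choices, not taken from `error_analysis.py`.

```python
from collections import Counter


def error_breakdown(examples, y_true, y_pred, positive=1):
    """Split misclassified examples into false positives and false negatives."""
    errors = {"false_positive": [], "false_negative": []}
    for ex, t, p in zip(examples, y_true, y_pred):
        if p == positive and t != positive:
            errors["false_positive"].append(ex)
        elif t == positive and p != positive:
            errors["false_negative"].append(ex)
    return errors


def length_histogram(texts, bin_width=25):
    """Count texts per whitespace-token length bin (keys are bin lower bounds)."""
    counts = Counter((len(text.split()) // bin_width) * bin_width for text in texts)
    return dict(sorted(counts.items()))


def print_histogram(hist, bin_width=25):
    """Render a histogram as text bars, one row per bin."""
    for lower, n in hist.items():
        print(f"{lower:>4}-{lower + bin_width - 1:<4} {'#' * n}")
```

Comparing `length_histogram` over the false negatives with the same histogram over all positives is one simple way to check whether errors concentrate on unusually long or short texts.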

Experiments & Results

Dataset Splitting & Model Performance

Experiments are run at two levels of granularity: article level and paragraph level. For each level, we compare our model's precision, recall, and F1-score against those reported in the original paper.

Our model achieves performance comparable to the originally reported results.

How to Run the Project

Clone the Repository

git clone https://github.com/swetapati22/Counterhate_Arguments.git

Navigate to the Project Directory

cd Counterhate_Arguments

Install Dependencies

pip install -r requirements.txt

Data Preparation

python prepare_data.py --csv-file <path_to_csv_file> --level <level> --output-dir <output_directory>

Train the Model

python train.py --data-dir <processed_data_path> --level <level> --output-dir <output_path>

Evaluate the Model

python test.py --data-dir <processed_data_path> --trained-model-dir <trained_model_path> --output-dir <output_path>

Perform Error Analysis

python error_analysis.py --data-dir <processed_data_path> --trained-model-dir <trained_model_path> --output-dir <output_path>
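The four commands above can also be chained from Python. This is a minimal sketch, not part of the repository. The `build_pipeline` and `run_pipeline` helpers and the per-level directory layout are hypothetical. The sketch uses only the flags shown above and makes two assumptions. First, `<level>` takes the value `article` or `paragraph`, as the quick-run script names suggest. Second, the `--output-dir` of `train.py` is the directory that `test.py` and `error_analysis.py` expect as `--trained-model-dir`.

```python
import subprocess
import sys
from pathlib import Path


def build_pipeline(csv_file, level, work_dir):
    """Return the argv lists for the four steps (hypothetical directory layout)."""
    work = Path(work_dir)
    data_dir = str(work / f"{level}_data")
    model_dir = str(work / f"{level}_model")
    results_dir = str(work / f"{level}_results")
    analysis_dir = str(work / f"{level}_error_analysis")
    py = sys.executable  # run the scripts with the current interpreter
    return [
        [py, "prepare_data.py", "--csv-file", str(csv_file),
         "--level", level, "--output-dir", data_dir],
        [py, "train.py", "--data-dir", data_dir,
         "--level", level, "--output-dir", model_dir],
        [py, "test.py", "--data-dir", data_dir,
         "--trained-model-dir", model_dir, "--output-dir", results_dir],
        [py, "error_analysis.py", "--data-dir", data_dir,
         "--trained-model-dir", model_dir, "--output-dir", analysis_dir],
    ]


def run_pipeline(csv_file, level, work_dir):
    """Run each step in order from the repository root."""
    for cmd in build_pipeline(csv_file, level, work_dir):
        print("running:", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

For example, `run_pipeline("data/tweets.csv", "paragraph", "runs")` (hypothetical paths) would run all four steps in order. Because `check=True` raises `subprocess.CalledProcessError`, the pipeline stops at the first step that fails.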

Quick Run Scripts

Instead of running steps individually, execute:

- article_script.sh for article-level processing.

- paragraph_script.sh for paragraph-level processing.

Key Takeaways

- Reproducibility Validated: Our results closely align with the original research.

- Counterhate is Crucial: Identifying logical, evidence-backed counterhate responses can help improve online discourse.

- Future Work: Exploring how counterhate identification generalizes across languages.

Citation

If you use this work, please cite:

@misc{papneja_pati_2024,
  author = {Papneja, S. and Pati, S.},
  title  = {Implementing Authentic Counterhate Arguments},
  year   = {2024},
  url    = {https://github.com/swetapati22/Counterhate_Arguments},
  note   = {GitHub Repository}
}